OpenResty: a Swiss Army Proxy for Serverless; WAL, Slack, Zapier and Auth

"At CloudFlare, Nginx is at the core of what we do. It is part of the underlying foundation of our reverse proxy service. In addition to the built-in Nginx functionalities, we use an array of custom C modules that are specific to our infrastructure including load balancing, monitoring, and caching. Recently, we've been adding more simple services. And they are almost exclusively written in Lua."

A while ago I started writing an identity aware proxy (IAP) to secure a binary with authentication. However, what started as a minimal auth layer has steadily grown features. I have come to appreciate that the reverse proxy is a great layer for handling cross-cutting concerns such as auth, at-least-once delivery and adapting between services. Furthermore, I have found OpenResty provides amazing performance and flexibility, AND it fits the serverless paradigm almost perfectly.

Concretely, I have been working on extending the IAP to ingest and reshape signals from Slack and Zapier, tunnel them through a Write Ahead Log (WAL) and verify their authenticity, all before they hit our application binary. It turns out there are huge technical advantages in doing these integrations at the proxy layer.

The first win for the proxy is as a general purpose adapter. You often need to change the shape of the JSON payloads exchanged between independently developed services. Given the utility of wrapping services with a common auth layer anyway, the proxy is a convenient point to do the domain mapping too. With OpenResty you get to do this in a performant binary.

The second win was using the proxy to minimize response latency. Slack insists bots reply within 3 seconds. If an upstream is a serverless JVM process, you can easily time out when upstream is cold. We solved this in the proxy layer by buffering incoming requests into a managed queue, somewhat like a Write Ahead Log (WAL). This meant we could lower latency by replying to Slack's webhook as soon as the queue acknowledged the write. Because OpenResty is C plus Lua, it starts quickly, which is about the best you can do in a serverless environment.

With the WAL, we get at-least-once delivery semantics. Putting a WAL at the proxy layer can paper over a ton of upstream reliability issues. This implies that as long as upstream is idempotent, you don't need retry logic upstream. This simplifies application development, and widens the stack choice upstream. Specifically for us, it meant a slow start JVM binary did not need to be rewritten in order to be deployed on serverless.

Finally, we could verify the authenticity of incoming messages fast, so that potential attacks are stopped before consuming more expensive resources upstream. Again, OpenResty is likely to be faster (and therefore cheaper) than an application server at this rote task. We found it relatively painless to store secrets in Google Secret Manager and retrieve them in the proxy.

Overall we found that OpenResty is almost the perfect technology for Serverless. It gives you C performance (response latency as low as 5ms), fast startup times (400ms cold starts on Cloud Run) and the production readiness of Nginx, all whilst giving you the flexibility to customize and add features to your infrastructure boundary with Lua scripting.

It's worth noting that Cloud Run scales to zero (unlike Fargate) and supports concurrent operations (unlike Lambda and Google Cloud Functions). OpenResty + Cloud Run will let you serve a ton of concurrent traffic on a single instance, so I expect it to be the most cost efficient of these options. While its cold start is higher than, say, Lambda (we get 400ms vs 200ms), it needs fewer scaling events, so I expect cold starts to be less frequent for most deployments.

Having the proxy handle more use cases (e.g. retry logic) moves cost out of application binaries and into the slickest part of the infra. You don't need a Kubernetes cluster to reap all these benefits, but you could deploy it in a cluster if you wish. We have managed to package all our functionality into a single small serverless service, deployed by Terraform, MIT licensed.

Next I will go into the specifics of how our particular development went, and how we solved various tactical development issues. This will probably be too detailed for most readers, but I have tried to order the topics by generalizability.

Local development with Terraform and Docker-compose

The slowness of Cloud Run deployments made intricate proxy feature development impractical, so the first issue to solve was local development. As Cloud Run ultimately deploys Docker images, we used docker-compose to bring up our binary along with a GCE Metadata Emulator.

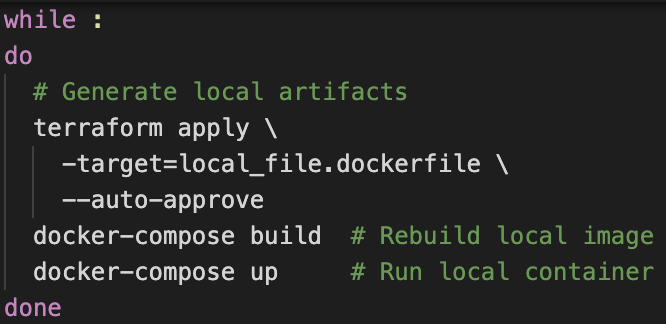

Our Dockerfile is generated from Terraform templates, but you can ask Terraform to generate that local file, *without deploying the whole project*, with the `-target` flag. Thus we can create a semi-decent development loop by sequencing Terraform artifact generation and docker-compose in a loop, with no need to rewrite the Terraform recipe to support local development!

With this loop wrapped in a shell script, pressing CTRL + C in the shell rebuilds and reruns the binary. The tricky bit is exiting the loop! If you invoke it with "/bin/bash test/dev.sh" the process will be named bash, so you can exit with "killall bash". The OAuth 2.0 redirect_uri will not work with localhost, so you will need to copy prod tokens from the prod deployment using /login?token=true.

Adding a Write Ahead Log with Pub/Sub

To be able to respond to incoming requests quickly and confidently, a general purpose internal location /wal/… was added to the proxy. Any request forwarded to /wal/<PATH> was assumed to be destined for UPSTREAM/<PATH>, but would travel via a Pub/Sub topic and subscription. This offloads the persistent storage of the buffer to a dedicated service with great latency and durability guarantees.

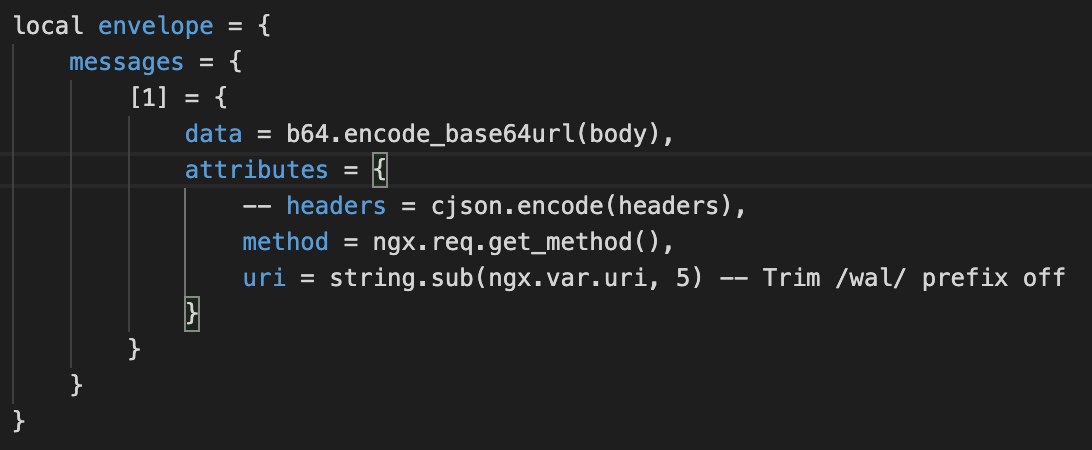

Each WAL message essentially encapsulates an HTTP request. So the headers, URI, method and body were placed in an envelope and sent to Pub/Sub. The request body was mapped to the base64 encoded Pub/Sub data field, and we used Pub/Sub attributes to store the rest.

Calling Pub/Sub is a simple POST request to a topic provisioned by Terraform.

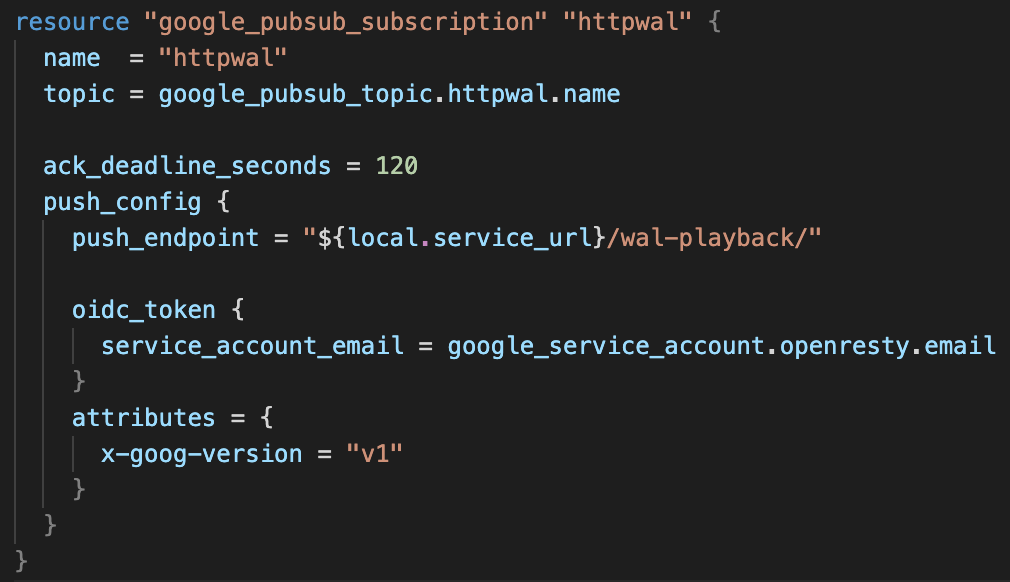
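To make the envelope concrete, here is a minimal sketch in Python (the proxy itself does this in Lua). It builds the JSON body that the Pub/Sub REST publish call expects. The attribute names `method`, `uri` and `headers`, and the choice to JSON-encode the headers, are assumptions for illustration, not necessarily what our proxy uses.

```python
import base64
import json


def build_wal_envelope(method, uri, headers, body):
    """Wrap an HTTP request as a Pub/Sub publish payload.

    Attribute names here are illustrative assumptions.
    """
    message = {
        # Pub/Sub's REST API requires the data field to be base64 encoded.
        "data": base64.b64encode(body).decode("ascii"),
        # Attribute values must be strings, so the headers are JSON-encoded.
        "attributes": {
            "method": method,
            "uri": uri,
            "headers": json.dumps(headers, sort_keys=True),
        },
    }
    return {"messages": [message]}


def publish_url(project, topic):
    """REST endpoint that the envelope is POSTed to."""
    return (
        "https://pubsub.googleapis.com/v1/"
        f"projects/{project}/topics/{topic}:publish"
    )


envelope = build_wal_envelope(
    "POST",
    "/slack/command",  # hypothetical upstream path
    {"content-type": "application/json"},
    b'{"text": "hi"}',
)
print(json.dumps(envelope, indent=2))
```

The proxy can reply to the original caller as soon as this POST is acknowledged, which is where the latency win comes from.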

A Pub/Sub subscription was provisioned that will push the envelopes back to the proxy to location /wal-playback. By specifying an oidc_token, Pub/Sub will add an ID token that can be verified in the proxy.

In the OpenResty config we expose /wal-playback to the internet, but we verify the incoming token before unpacking the envelope and sending upstream.

For our use case our upstream was hosted on Cloud Run too. If the upstream response was status code 429 (Too Many Requests), the container is scaling up and the request should be retried. Similarly, a status code 500 means upstream is broken and the request should be retried. For these response codes the proxy returns status 500 to Pub/Sub, which triggers its retry behaviour, leading to at-least-once delivery semantics.
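The playback side can be sketched the same way, again in Python rather than the proxy's Lua. The push body layout (`message.data`, `message.attributes`) is Pub/Sub's standard push format; the attribute names are the same illustrative assumptions as before, and acknowledging every other upstream status with a 200 is my assumption, since only the 429 and 500 cases are described above.

```python
import base64
import json

# Upstream statuses that should make Pub/Sub redeliver the message.
RETRYABLE_UPSTREAM_STATUSES = {429, 500}


def unpack_push_message(push_body):
    """Recover the original HTTP request from a Pub/Sub push delivery."""
    message = json.loads(push_body)["message"]
    attributes = message.get("attributes", {})
    return {
        "method": attributes["method"],
        "uri": attributes["uri"],
        "headers": json.loads(attributes["headers"]),
        "body": base64.b64decode(message.get("data", "")),
    }


def status_for_pubsub(upstream_status):
    """Map the upstream response to the status returned to Pub/Sub."""
    if upstream_status in RETRYABLE_UPSTREAM_STATUSES:
        return 500  # not acknowledged, so Pub/Sub retries the push
    return 200  # assumption: everything else is acknowledged


push_body = json.dumps({
    "message": {
        "data": base64.b64encode(b'{"text": "hi"}').decode("ascii"),
        "attributes": {
            "method": "POST",
            "uri": "/slack/command",  # hypothetical upstream path
            "headers": "{}",
        },
    },
})
request = unpack_push_message(push_body)
print(request["method"], request["uri"], status_for_pubsub(429))
```

Because a retried message may reach upstream more than once, this only works cleanly when upstream is idempotent.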

In our deployment the Zapier and Slack integrations used the /wal/ proxy.

Integrating Slack

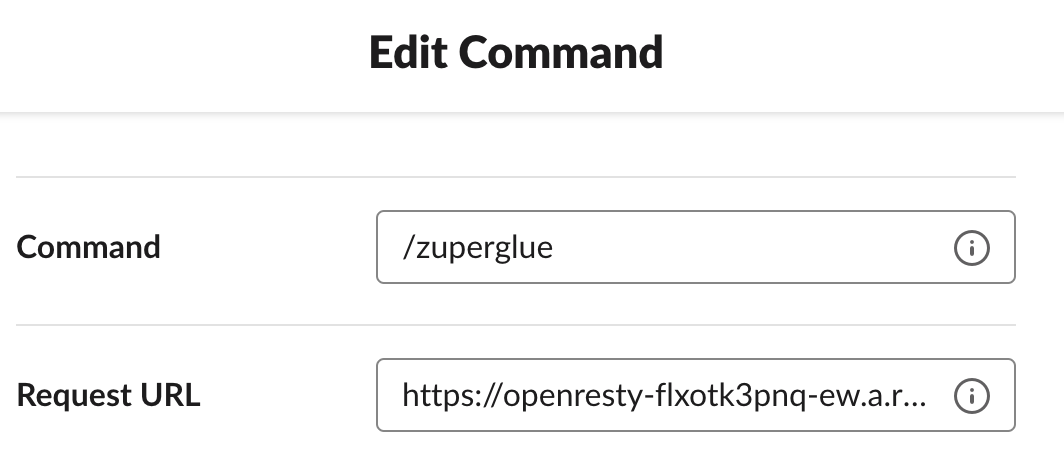

We wanted to boost internal productivity by adding custom "slash commands" to our internal business workflow engine. Creating an internal bot and registering a new command is very easy: you just need to supply a public endpoint.

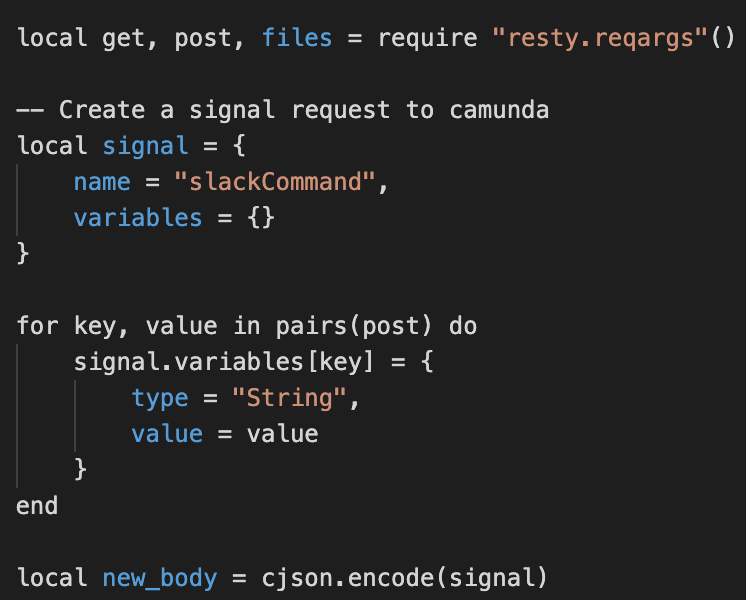

Slack sends outbound webhooks as application/x-www-form-urlencoded. Our upstream speaks JSON, but converting is trivial with the resty-reqargs package.

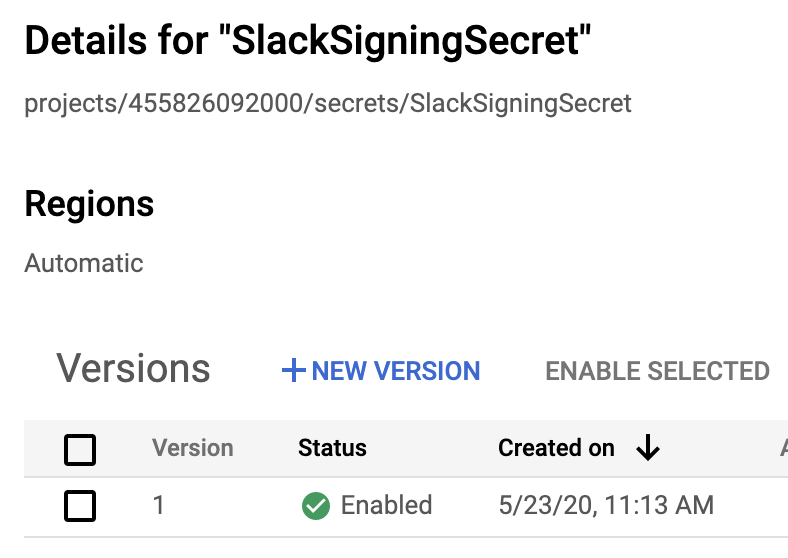
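For readers who do not use OpenResty, the same conversion is a few lines in most languages. A minimal Python sketch follows; the field names in the example are a subset of what Slack sends for a slash command, and keeping only the last value of a repeated key is my simplification.

```python
import json
from urllib.parse import parse_qs


def form_to_json(form_body):
    """Convert a form-urlencoded body into a flat JSON object."""
    parsed = parse_qs(form_body, keep_blank_values=True)
    # parse_qs returns a list per key; keep the last value of each.
    flat = {key: values[-1] for key, values in parsed.items()}
    return json.dumps(flat, sort_keys=True)


print(form_to_json("command=%2Fdeploy&text=staging+now&user_id=U123"))
# prints {"command": "/deploy", "text": "staging now", "user_id": "U123"}
```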

As this is a public endpoint we need to ensure the authenticity of the request. Slack uses a shared symmetric signing key. As we don't want secrets anywhere near Terraform, we manually copy the key into Google Secret Manager.

Then we only need to store the resource id of the key in Terraform. BTW, Secret Manager is excellent! You can reference the latest version so you can rotate secrets without bothering Terraform.

In the OpenResty config we fetch the secret with an authenticated GET and base64 decode. We store the secret in a global variable for use across requests.

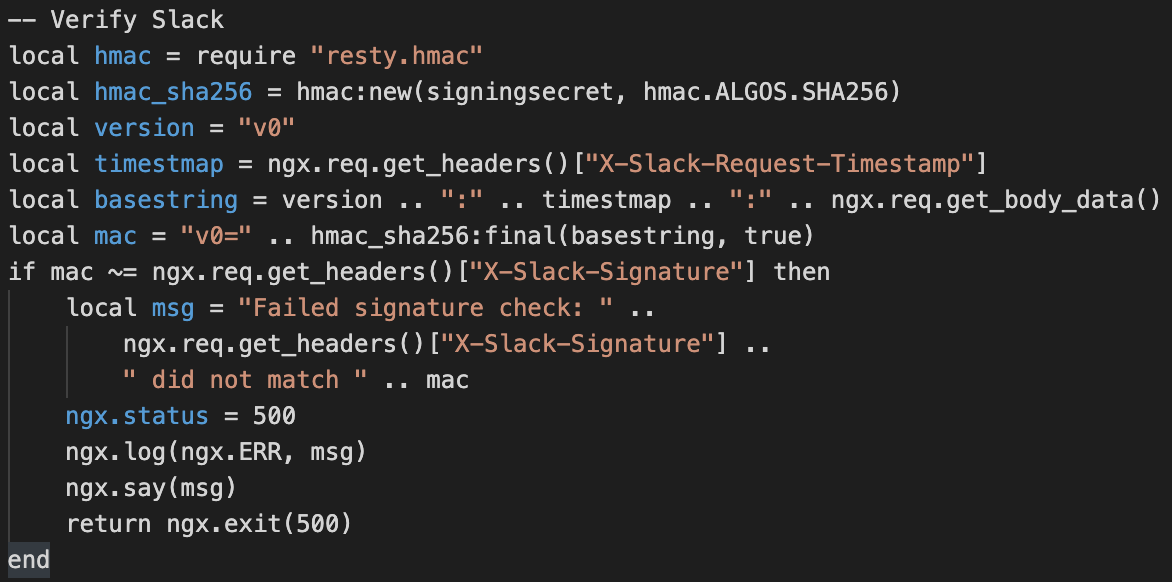
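As a rough Python equivalent of that fetch-and-cache step: Secret Manager's REST `access` call returns the secret as base64 under `payload.data`. The `fetch` argument is a hypothetical caller-supplied function that performs the authenticated GET and returns the response body, and the module-level dictionary stands in for the proxy's global variable.

```python
import base64
import json

# Cache so the secret is fetched once and reused across requests.
_SECRET_CACHE = {}


def secret_access_url(project, secret, version="latest"):
    """REST URL for reading a secret version ("latest" follows rotations)."""
    return (
        "https://secretmanager.googleapis.com/v1/"
        f"projects/{project}/secrets/{secret}/versions/{version}:access"
    )


def decode_secret_response(response_body):
    """Extract the raw secret bytes from an access response."""
    payload = json.loads(response_body)["payload"]
    return base64.b64decode(payload["data"])


def get_secret(project, secret, fetch):
    """Return the secret, calling fetch(url) only on a cache miss.

    fetch is a hypothetical helper that does the authenticated GET.
    """
    url = secret_access_url(project, secret)
    if url not in _SECRET_CACHE:
        _SECRET_CACHE[url] = decode_secret_response(fetch(url))
    return _SECRET_CACHE[url]
```

One trade-off of caching like this is that a rotated secret is only picked up when the instance restarts, unless you also add an expiry.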

The Slack documentation is pretty good at explaining how to verify a request. Using the resty.hmac package it took only a few lines of Lua.
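The scheme Slack documents is an HMAC-SHA256 over `v0:<timestamp>:<raw body>`, compared against the `X-Slack-Signature` header, with the timestamp taken from `X-Slack-Request-Timestamp`. Here is a minimal sketch of that check in Python rather than Lua; the function name and the `now` parameter (there to make it testable) are my own, and the five minute replay window follows Slack's recommendation.

```python
import hashlib
import hmac
import time


def verify_slack_request(signing_secret, timestamp, body, signature,
                         now=None, max_skew=300):
    """Return True if the signature matches and the request is recent.

    signing_secret and body are bytes; timestamp and signature are the
    header values as strings.
    """
    now = time.time() if now is None else now
    try:
        request_time = int(timestamp)
    except ValueError:
        return False
    # Reject old requests to limit replay attacks.
    if abs(now - request_time) > max_skew:
        return False
    base_string = b"v0:" + timestamp.encode("utf-8") + b":" + body
    digest = hmac.new(signing_secret, base_string, hashlib.sha256).hexdigest()
    expected = "v0=" + digest
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode("utf-8"),
                               signature.encode("utf-8"))
```

The check must run against the raw request body, before any form decoding, or the signature will not match.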

Of course, the real difficulty with Slack is the 3 second timeout requirement, so inbound Slack commands were forwarded to the WAL for a quick response with at-least-once delivery semantics.

Integrating Zapier

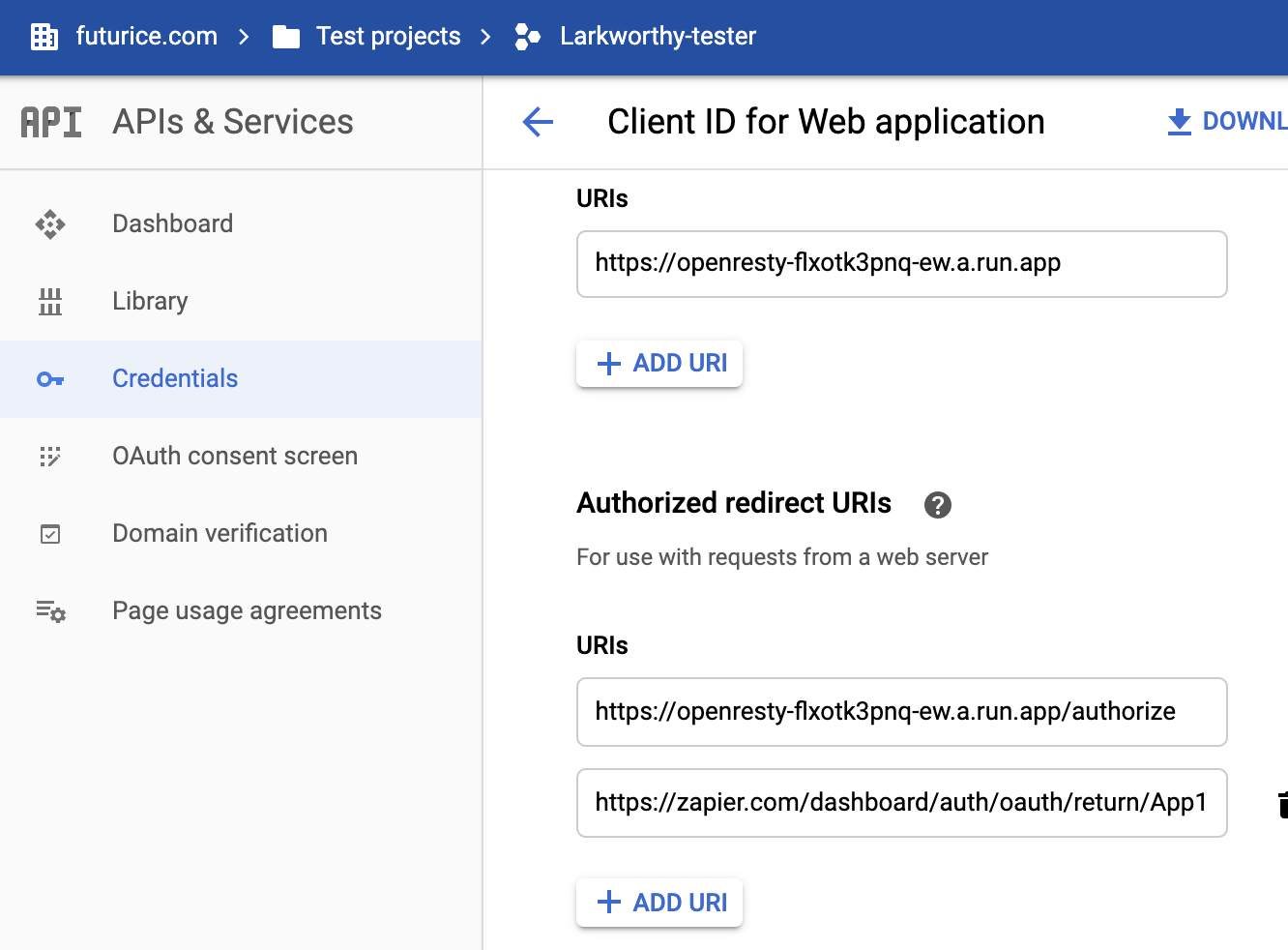

Zapier is another great bang-for-buck integration. Once you have an Identity Aware Proxy, it's simple to create an internal app that can call your APIs.

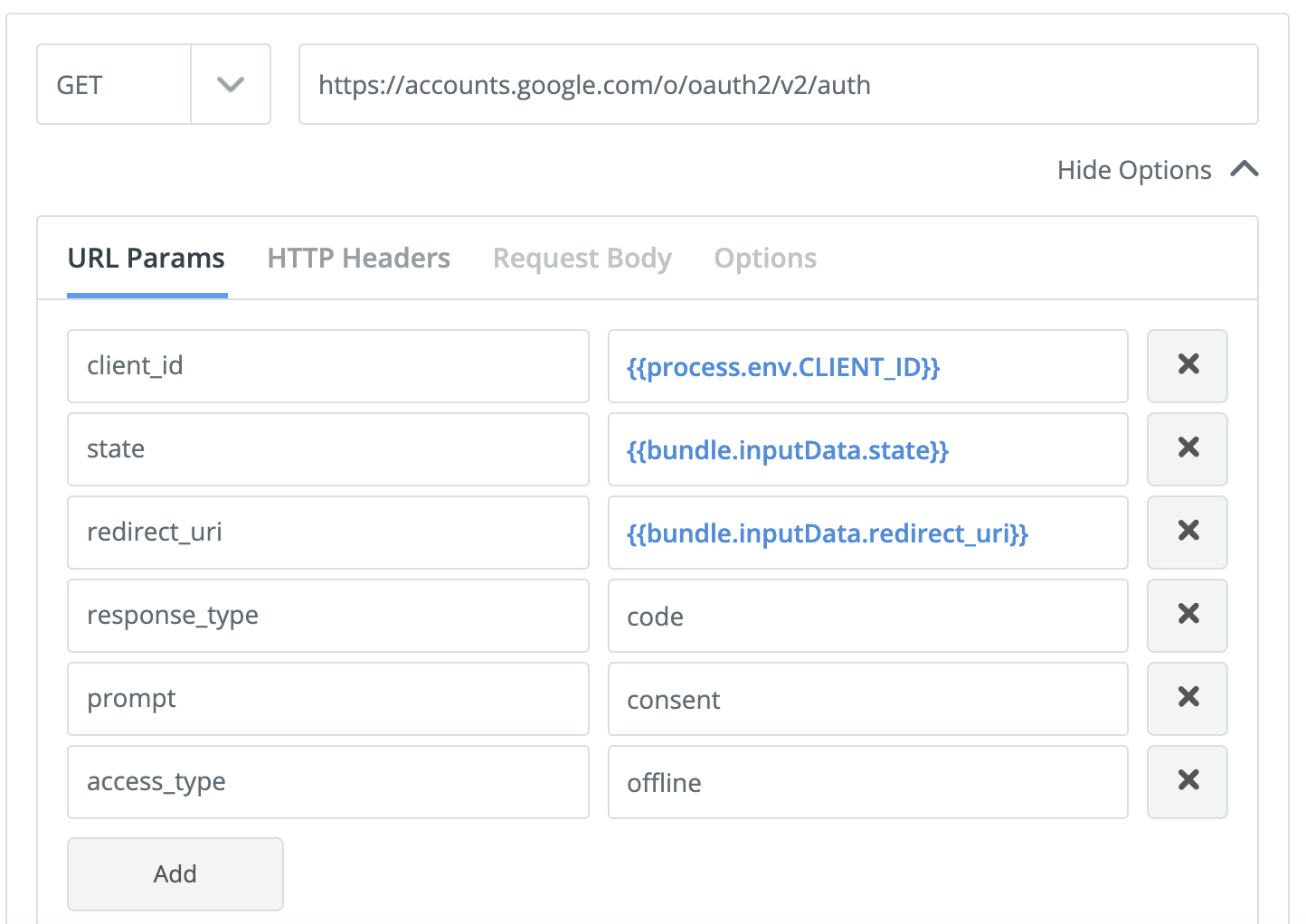

After creating a Zapier App, you need to add Zapier as an authorized redirect URL.

For the authorization to work indefinitely with Google Auth, you need to add parameters to the token request to enable offline access.

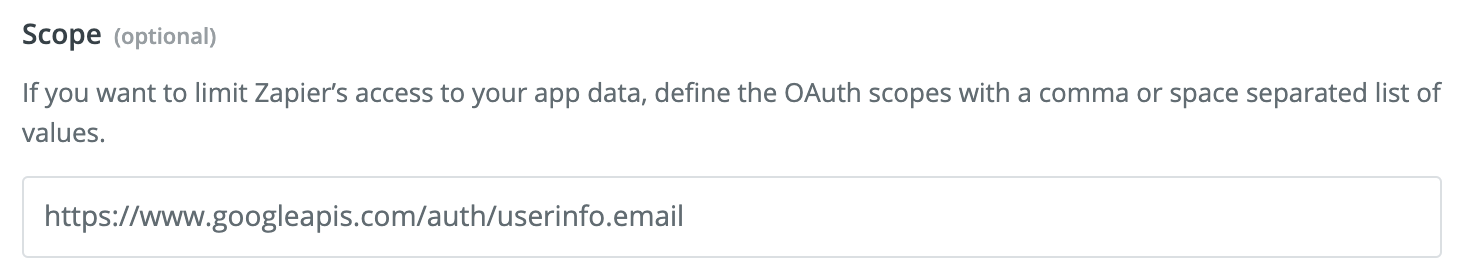

You only need email scope:

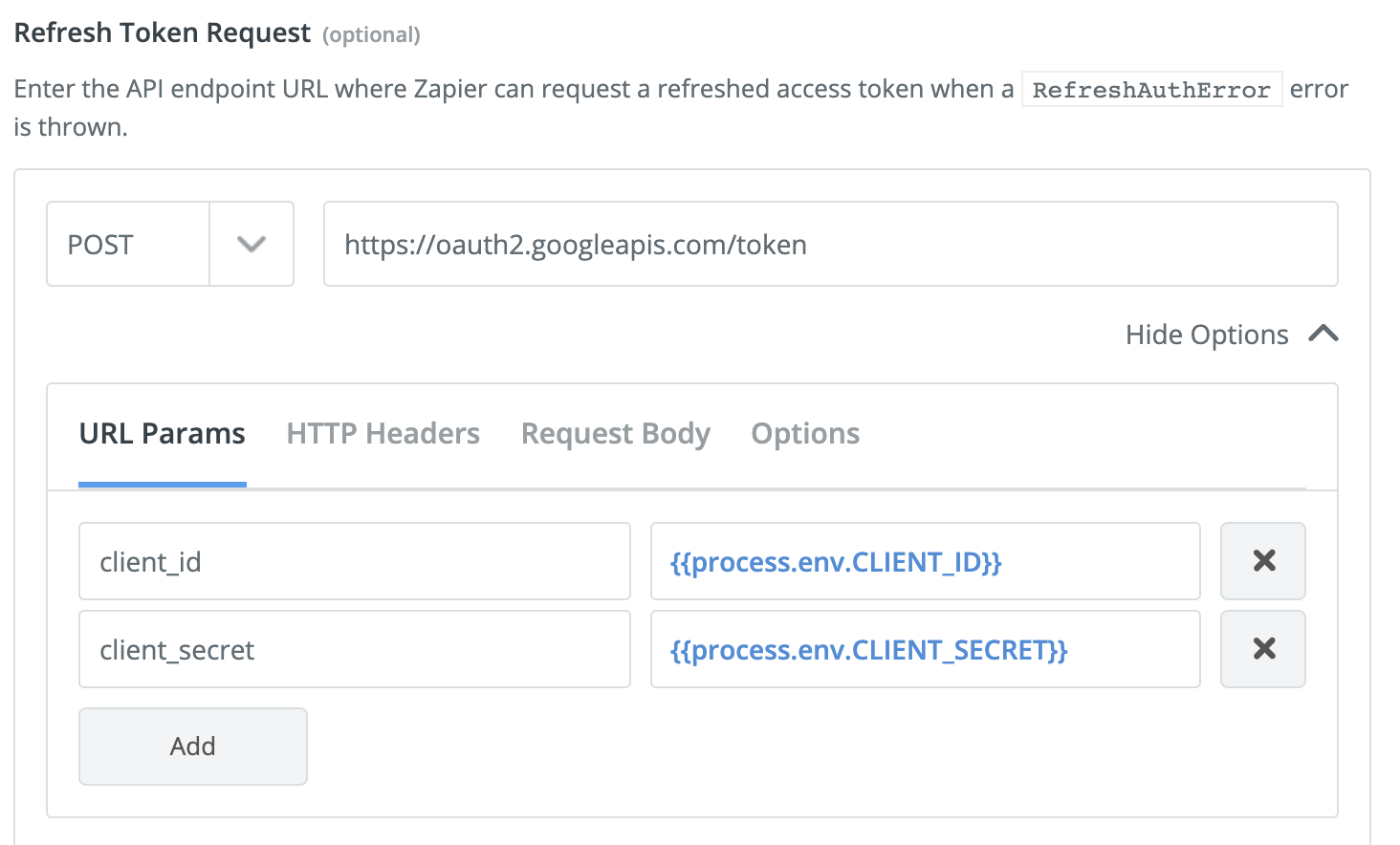

And for the refresh token endpoint to work correctly you need to add the client id and secret:

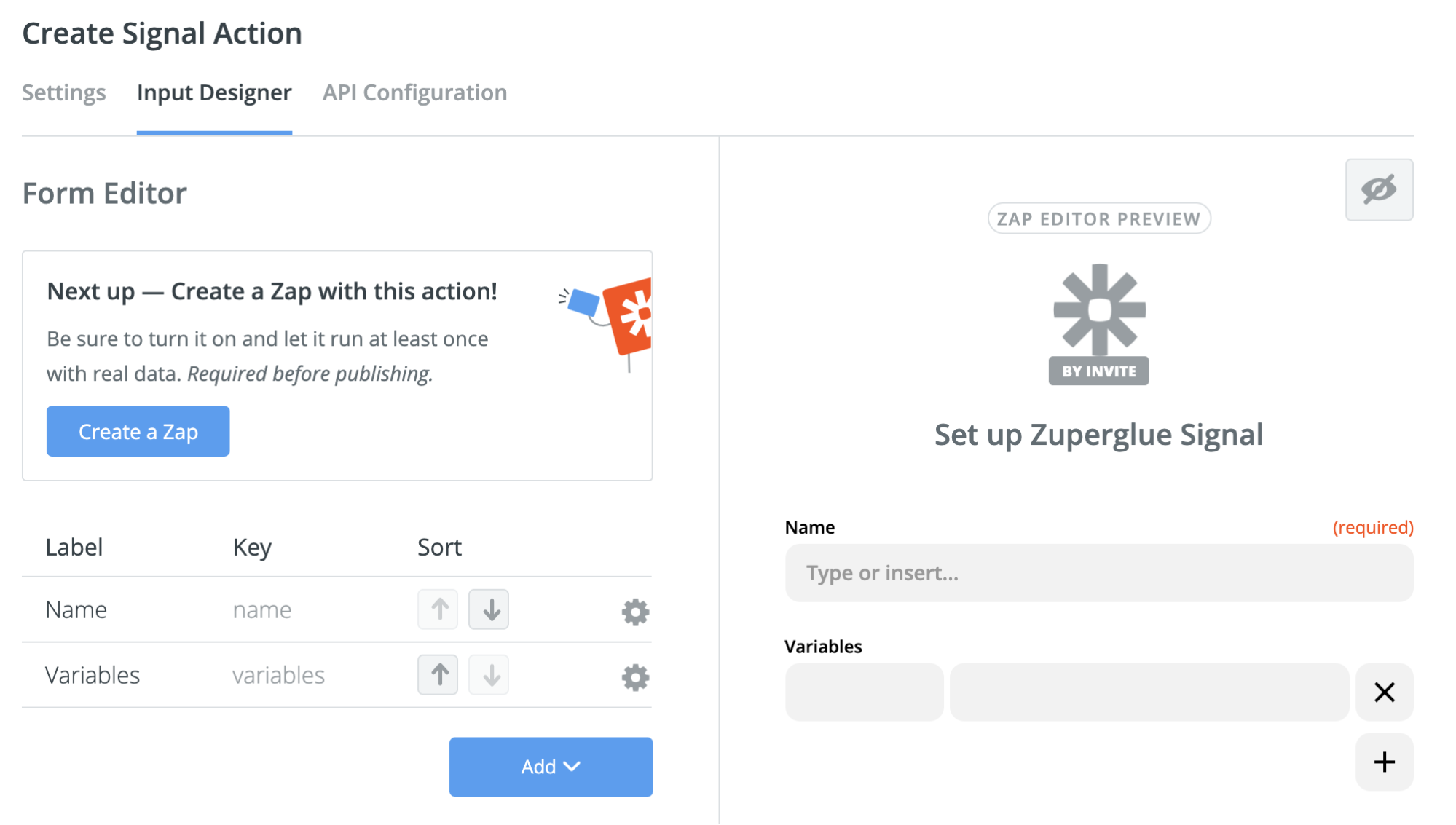
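For reference, the OAuth settings above boil down to the following requests, sketched in Python. With Google, offline access is requested with `access_type=offline` on the authorization URL; adding `prompt=consent` so that a refresh token is reissued is my assumption and may not match our exact Zapier configuration. The function names are hypothetical.

```python
from urllib.parse import urlencode

GOOGLE_AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"
GOOGLE_TOKEN_ENDPOINT = "https://oauth2.googleapis.com/token"


def authorization_url(client_id, redirect_uri, state):
    """Authorization URL that asks for a refresh token and email scope."""
    params = {
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "response_type": "code",
        "scope": "email",
        "access_type": "offline",  # ask Google for a refresh token
        "prompt": "consent",       # assumption: force re-consent
        "state": state,
    }
    return GOOGLE_AUTH_ENDPOINT + "?" + urlencode(params)


def refresh_request_body(client_id, client_secret, refresh_token):
    """Form body POSTed to the token endpoint to refresh an access token."""
    return urlencode({
        "grant_type": "refresh_token",
        "refresh_token": refresh_token,
        "client_id": client_id,
        "client_secret": client_secret,
    })


print(authorization_url("my-client-id", "https://example.com/callback", "xyz"))
```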

To send a signal from Zapier to our internal software, we created an Action called signal, which has a name key plus a string-to-string dictionary of variables. This seems like a minimal but flexible schema.
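A payload in that shape is easy to validate. The sketch below is in Python, and the key name `variables` is an assumption on my part; only the name key and the string-to-string dictionary come from our actual schema.

```python
def validate_signal(payload):
    """Return (name, variables) for a well-formed signal, else raise."""
    name = payload.get("name")
    if not isinstance(name, str) or not name:
        raise ValueError("signal needs a non-empty string name")
    # "variables" is a hypothetical key name for the dictionary.
    variables = payload.get("variables", {})
    if not isinstance(variables, dict):
        raise ValueError("variables must be a dictionary")
    for key, value in variables.items():
        if not isinstance(key, str) or not isinstance(value, str):
            raise ValueError("variables must map strings to strings")
    return name, variables


name, variables = validate_signal(
    {"name": "invoice_paid", "variables": {"customer": "acme"}}
)
print(name, variables)
```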

It's very nice that Zapier works with OAuth 2.0, and it helped verify the correctness of our own identity implementation.

Learn more

Our internal workflow engine is being developed completely open source and MIT licensed. Read more about our vision of OpenAPI based digital process automation.

- Tom Larkworthy, Senior Cloud Architect