What I Learned During my Brief Existence as a Robot

One and a half years ago, I operated an InMoov robot for Guerilla Films’ documentary "Outouden laakso" (a reference to the uncanny valley, a concept examining the likeability of humanoid robots), or “Who Made You?” in English. Yes, spoiler alert: the white humanoid robot shown at the beginning and end of the documentary did indeed have a human operator, although the children interacting with it didn’t know that.

A bit about the documentary if you’re not familiar: it was published in February 2019, shown at several venues, and generated widespread societal discourse in Finland on AI, robotics, and their ethical applications. The documentary is viewable for free on Yle Areena until the 12th of July (if you’re located in Finland), and I highly recommend you watch it.

Guerilla Films approached us as they had heard the company I work at — Futurice — had built an instance of the open source humanoid robot InMoov, originally designed by Gaël Langevin. Guerilla Films thought that children interacting with a robot could make for interesting material for their documentary on the role of AI and its applications in society.

This felt meaningful to us as well. Robotics is increasingly finding new places in society, moving from industrial environments to interacting with humans. Examining these interactions in ways that are interesting to broad audiences is important for spreading knowledge of these technologies to every member of society. AI and robotics need not be the domain of a select few nerds. They belong to everyone. The construction of new technologies needs people across multiple disciplines, in order to understand from multiple perspectives exactly what is needed to build ethical applications. I hope the documentary, and this blog post, rouse your interest to explore the topic further.

Imagine a 1st grader in place of the photographer

The interactions were set up as follows: we booked a two-hour slot one morning at a school in Helsinki. The kids wrote questions for the robot beforehand, so the operator (me) could examine them and prepare accordingly.

The morning of, we set up the robot in the school’s gym. The gym had a stage surrounded by curtains, where the robot and child were to meet. Cables ran from behind the robot to behind the curtains, all the way to the operator’s computer. I sat behind the curtains at the computer, manually typing in what the robot said to the children (thank you, 10-year-old me, for playing that computer game that taught me how to type with the ten-finger system). I also had a few buttons to press for the robot’s gestures. Children met the robot one by one. At the end of our time slot, around 8 children came to interact with the robot all at once, so everyone could have the chance to meet it.

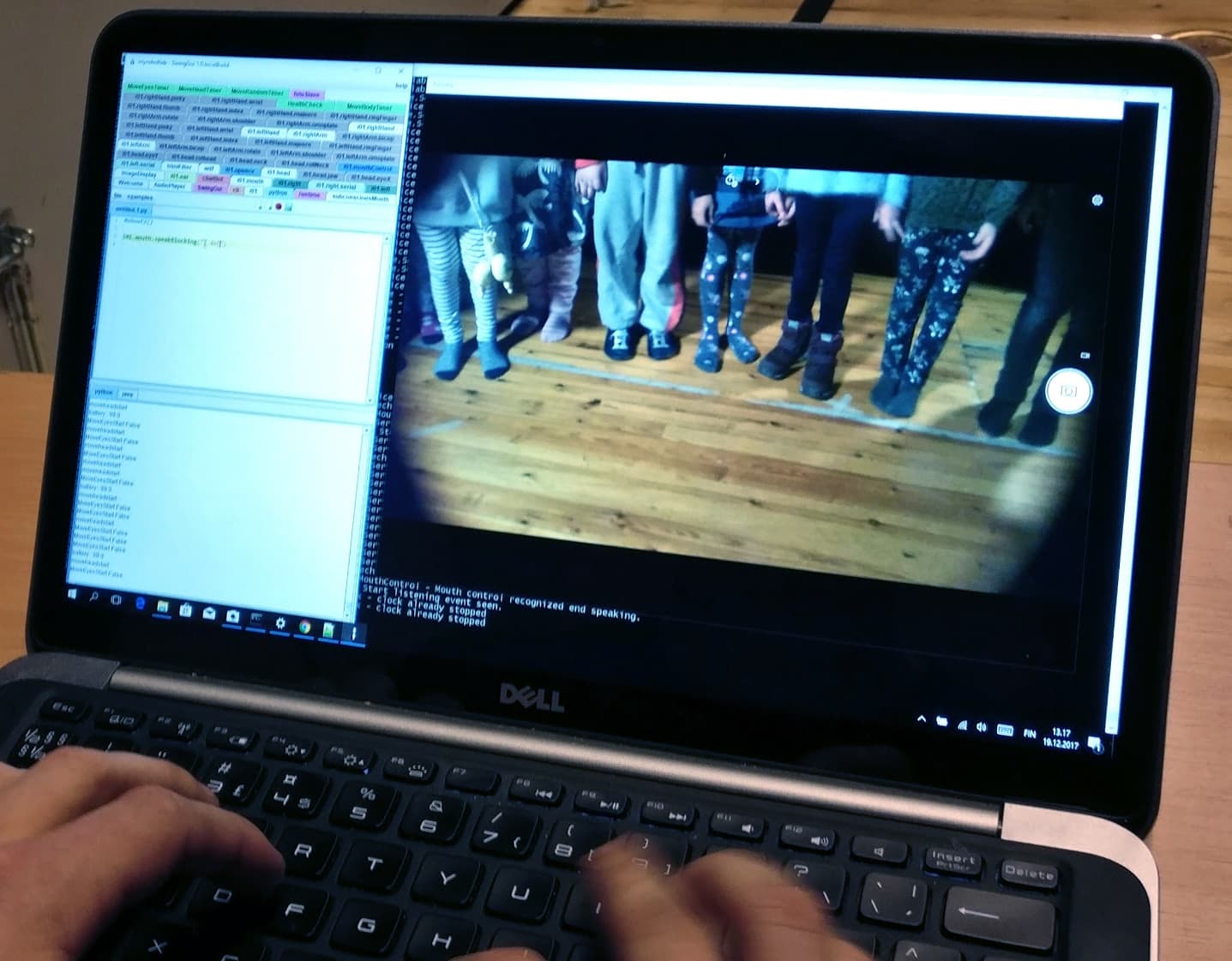

8 excited children’s feet as seen by the robot operator

I left the interaction having met all the children, but not really. The children were meeting a robot.

Robot: “Do you think robots and humans are similar?”

Child: “No”

Robot: “Why not?”

Child: “Because they’re not made of metal. The humans, they’re not made of metal.”

What I learned during my brief existence as a robot:

Children approached getting to know the robot like they would approach a new friend. They asked it what its favourite food was, if it had friends, what its family was like. Robot-specific questions (like what the square root of a gigantic number was) came later. In essence, children were extending empathy to the robot, treating it like an equal. This is positive: the children didn’t have impulses to treat the robot unfairly. This human tendency for empathy toward robots has been shown and discussed in previous studies (Darling et al., 2015; Scheutz, 2011). It raises an interesting design question: what types of robots do we want to design, given that we feel empathy for them by default?

The 1st graders were young enough to have great suspension of disbelief. They were willing to believe that the robot was actually alive. Not one of them said out loud that they thought a human was operating the robot, nor did any of them connect my sitting at the control computer behind the curtain with the robot. The presence of fiction and fantasy in human-robot interaction, and its effect on the design choices of the roboticist, has been previously discussed (Duffy & Zawieska, 2012). What degree of fantasy is useful to the user? What degree of fantasy is ethical to preserve?

Some kids were shy, and some were not. It would be interesting to see whether the children who were more shy toward the robot are also shy toward humans. Do they relate to robots in a similar way as to humans? Do children approach robots in the same way they do unfamiliar humans? Are they suspicious of them? How about adults? The larger question around this topic is: if we can affect the general level of trust toward robots by varying the robot's design parameters, how should that trust be calibrated for each application?

Robots could be more suitable for select users. One conversation in particular stuck in my mind for days after. It was the one prominently shown in the documentary’s opening shots. The child in question was open to talking about what they thought was the difference between humans and robots, what made robots robots, and what made humans humans. They observed that while robots live forever until they’re turned off, humans need freedom from life. Interestingly, the child’s teacher remarked that this child did not typically stand out in a traditional school environment. Something about the robot engaged the child differently than a classroom atmosphere. This points to the potential of robots as effective learning tools for different types of learners.

As final words, I am happy to see critical evaluation of robotics and its applications. The above is what I could glean from the short yet valuable interactions between the first graders and a robot.

I hope the sinister hypothetical sci-fi threats of AI and robotics, while concerns that should be acknowledged and steered away from as our future, do not stop us from building useful and beneficial applications today.

Darling, K., Nandy, P., & Breazeal, C. (2015, August). Empathic concern and the effect of stories in human-robot interaction. In 2015 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (pp. 770–775). IEEE.

Duffy, B. R., & Zawieska, K. (2012, September). Suspension of disbelief in social robotics. In 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication (pp. 484–489). IEEE.

Mori, M., MacDorman, K. F., & Kageki, N. (2012). The uncanny valley: The original essay by Masahiro Mori. IEEE Spectrum, 98–100.

Scheutz, M. (2011). The inherent dangers of unidirectional emotional bonds between humans and social robots. Robot ethics: The ethical and social implications of robotics, 205.

Minja Axelsson, Designer
